Yeah, it looks just like this.

What is it?

Briefly, Moonshot is a miniature blade enclosure. I'm sure there are marketing folks who would like me to use different terminology, but it boils down to servers, Ethernet switches and power all rolled into one box.

There are some key differentiators between this enclosure and some of its larger cousins:

Low Power - The whole package is tuned for high density and low power. There are no monstrously fast multi-socket servers available, but the density is amazing. With 8 cores per node and 180 nodes per chassis we're talking about 300+ cores per rack unit!

Less Redundancy - Unlike the C-class enclosures which sport redundant "Onboard Administrator" modules, Moonshot has a single "Chassis Manager". I do not view this as a problem for two reasons: First, Moonshot is mostly suited for massively horizontally scalable applications which should tolerate failure of a whole chassis. Second, failure of a Chassis Manager module doesn't impact running services. Rather, it becomes impossible to reconfigure things until the fault is repaired.

Less Flexibility - Unlike C-class, which have lots of options in terms of server blade accessories and communications (flex-mumble, many NICs, FC switching, etc...), Moonshot is pretty much fixed-configuration. On my cartridges, the only order-time configurable hardware option is a choice between a handful of hard drive offerings. Until recently, HP didn't even support mixing of server cartridge types within a chassis. The only extra-chassis communication mechanism is via a pair of Ethernet switches. Only three switch models are currently shipping, and there's not much choice involved: Switch selection is largely driven by the cartridge selection, which is driven by your intended workload.

Limited Storage - The only storage option currently available is the single mechanical drive or flash SSD built into each compute node. Storage blades exist (I've seen them on "Moonshot University" videos), but they don't seem to be shipping products quite yet.

2D Toroid Mesh - Moonshot has a cool cartridge-to-cartridge communications mesh built into the chassis. This mesh is in addition to the Ethernet path between cartridges and switches. My cartridges cannot leverage this feature at all, but I'm sure it's wonderful for the right workloads on the right cartridges.

Low Cost - The list above looks kinda negative. I don't mean for it to be. For all of the fancy stuff you don't get with Moonshot, the pricing is pretty compelling.

My Hardware Configuration

I've got access to the following gear:

- Moonshot 1500 Chassis w/management module

- Moonshot m300 server cartridges, each with a single 8-core Atom C2750 SoC

- Moonshot 45G switches (2 per chassis)

Physical Package

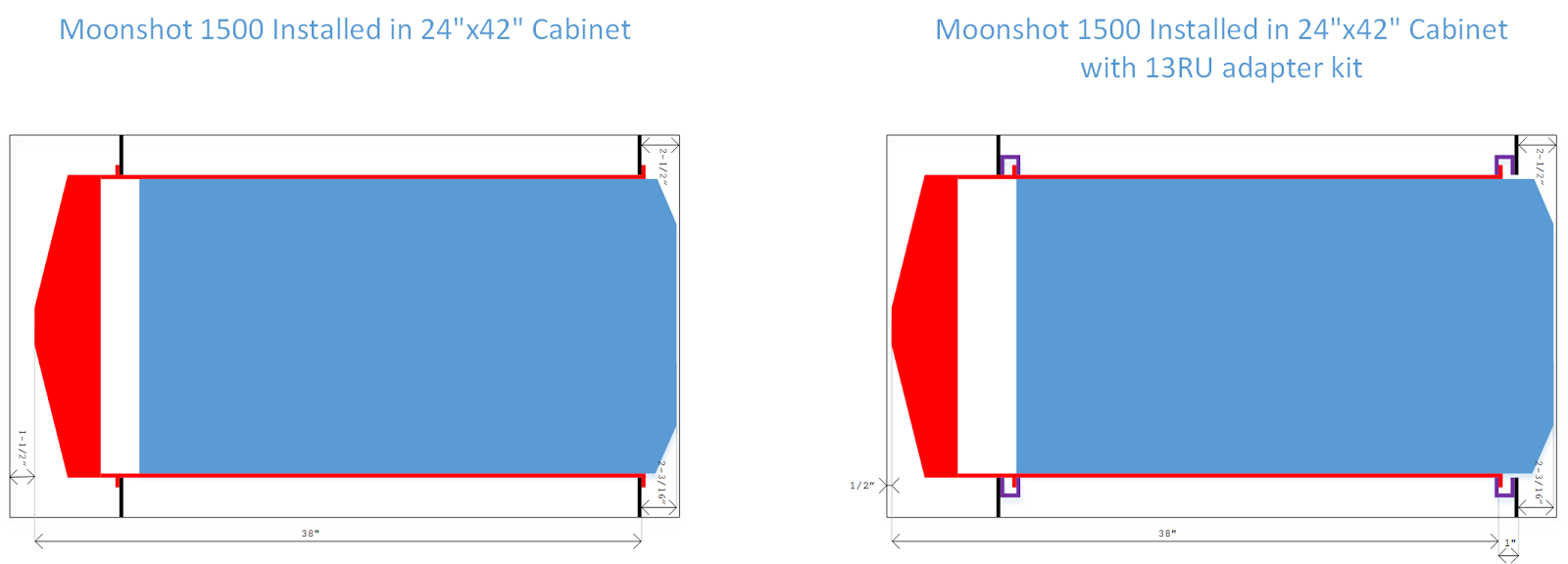

This box is big. Really big. It will fit into a standard 42" deep server cabinet, but only just barely, and only if the cabinet is set up with the front rails/posts in exactly the right spot.

Measuring from the front surface of the cabinet's mounting posts, a single Moonshot chassis protrudes about 2-3/16" toward the cold aisle door, and 38" (but it could be 39" - see below) toward the hot aisle door. The 38" dimension doesn't reflect the length of the chassis, but rather the rearward protrusion of the mounting rails and cable management hardware. The forward mounting posts need to be about 2 1/2" from the front of the cabinet (putting the server faceplate right up against the door mesh) for things to work out in a 42" cabinet.

The rails telescope in the way you'd expect. Their working depth range is 25-3/16" to 34-3/16".

The box is 4.3 RU tall. The rack hardware that comes with Moonshot aligns the bottom of the chassis with a rack unit boundary, and puts the "extra" 0.3 RU at the top of the chassis, never at the bottom. HP is proud to tell you that it's possible to get 3 chassis in 13 RU, or up to 10 chassis / 1800 compute nodes per cabinet. That tight packaging is only possible with accessory part 681677-B21. For some reason, the accessory isn't available for purchase a-la-carte, but rather only when purchasing a pile of Moonshots along with HP cabinets (!?). HP have told me that the sale restriction on the 13U adapter will be lifted, but I don't know whether it has happened yet. Watch out for that.
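The density numbers are easy to sanity-check. The sketch below uses only figures quoted in this post (8 cores per node, 180 nodes per chassis, 4.3 RU per chassis, 3 chassis per 13 RU). The one assumption, flagged in the comments, is that a chassis racked without the adapter effectively costs 5 whole rack units.

```python
import math

# Figures quoted in the post.
CORES_PER_NODE = 8
NODES_PER_CHASSIS = 180
CHASSIS_HEIGHT_RU = 4.3

CORES_PER_CHASSIS = CORES_PER_NODE * NODES_PER_CHASSIS


def cores_per_rack_unit(chassis_count, rack_units):
    """Cores delivered per rack unit for a group of chassis."""
    return chassis_count * CORES_PER_CHASSIS / rack_units


# Nominal density: count only the 4.3 RU the chassis itself occupies.
nominal = cores_per_rack_unit(1, CHASSIS_HEIGHT_RU)

# Assumption (not stated in the post): without the 13U adapter the space
# above each chassis is unusable, so every chassis consumes 5 RU.
standalone = cores_per_rack_unit(1, math.ceil(CHASSIS_HEIGHT_RU))

# With the adapter, three chassis share 13 RU.
packed = cores_per_rack_unit(3, 13)

# HP's "up to 10 chassis per cabinet" figure, expressed in nodes.
nodes_per_cabinet = 10 * NODES_PER_CHASSIS

print(f"nominal:    {nominal:.0f} cores/RU")
print(f"standalone: {standalone:.0f} cores/RU")
print(f"packed:     {packed:.0f} cores/RU")
print(f"nodes per cabinet: {nodes_per_cabinet}")
```

Under that assumption, the adapter is what gets a real rack close to the nominal "300+ cores per rack unit" figure.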

The adapter (HP part 681677-B21) is essentially four 22-3/4" long (13 rack units) "C" channels with two sets of mounting holes. You mount these four channels inboard (behind) each of the four mounting posts in the server cabinet. One set of holes is spaced in the usual fashion. These fix the channels to your cabinet. The other set of holes accept the Moonshot rails and are offset so that the Moonshot chassis are packed tight against each other.

Because the 13U adapter rails mount inboard of the cabinet posts, they don't impact the location of the chassis in the cabinet at all. The cold side protrusion of the chassis is still about 2-3/16". They do, however, move the server's rails and cable management stuff about an inch toward the hot side of the cabinet because the rails are now attached to the "C" channel, rather than the front of the cabinet. This increases the rearward protrusion from 38" to 39" and makes the whole package a bit over 41" long. It'll fit in a 42" cabinet, but only just.
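For anyone planning cabinet layouts, the depth arithmetic can be sketched as follows. The protrusion figures are the ones measured above. Treating the full 42" as usable door-to-door depth is an assumption, so check your own cabinet.

```python
from fractions import Fraction

# Measurements from the post, in inches, relative to the front mounting posts.
FRONT_PROTRUSION = Fraction(2) + Fraction(3, 16)  # toward the cold aisle
REAR_STANDARD = Fraction(38)                      # rails on the cabinet posts
REAR_WITH_ADAPTER = Fraction(39)                  # rails on the 13U "C" channels

# Assumption: the whole 42" is usable between the doors.
CABINET_DEPTH = Fraction(42)


def overall_depth(rear_protrusion):
    """Total front-to-back length of chassis plus rails and cable management."""
    return FRONT_PROTRUSION + rear_protrusion


def spare_depth(rear_protrusion):
    """Depth left over in the cabinet, front and rear combined."""
    return CABINET_DEPTH - overall_depth(rear_protrusion)


for label, rear in (("standard rails", REAR_STANDARD),
                    ("13U adapter", REAR_WITH_ADAPTER)):
    print(f"{label}: {float(overall_depth(rear)):.4f} in long, "
          f"{float(spare_depth(rear)):.4f} in to spare")
```

With the adapter in place, less than an inch remains, which matches the "only just" experience.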

Management Network

The Chassis Manager module has two 1000BASE-T connectors ("iLO" and "Link") and a serial port (115200,8,n,1). By default, the "Link" connector is disabled. The intention is for you to daisy-chain the "iLO" and "Link" interfaces of several chassis, hanging them all from a single management switch port. These interfaces lead to an intra-chassis bridge which does not run spanning tree. You can enable the "Link" connector and attach to two management switch ports if desired. With this configuration the management switch ports will see each other's BPDUs, causing one of them to block. You'll reach the Chassis Manager and the switch(es) or switch stack through these cables. The cartridges (mine anyway) do not have individual iLO addresses. It is possible to manage the switches in-band as well, in which case only the Chassis Manager uses these cables.

Virtual Serial Ports

The switches and the servers each have a "virtual serial port" which can be accessed from the Chassis Manager, effectively turning the Chassis Manager into a serial terminal server. There are some things to know here:

- The Chassis Manager might be supporting 180 compute nodes and 2 switches. That's 182 connections at 115200bps each, almost 21Mb/s in aggregate. There's a possibility that it can't keep up and will lose characters here and there.

- A maximum of 10 SSH VSP sessions are supported at a time. I suspect this is related to the point above.

- Compute node serial ports are available via IPMI 2.0-style Serial Over LAN. As far as I can tell, that is not possible with the switch console VSPs.

- The switches don't send everything to the CM's serial interface. Some early boot time stuff (including the ability to interrupt boot) goes only to the physical serial port on the switch uplink module.

- I initially planned to use the VSP console, but have since switched to the physical console port.
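The aggregate figure in the first point above comes from simple multiplication. Here is a minimal sketch of it, plus the load implied by the 10-session cap. The 10-bits-per-byte framing is an assumption based on the 8,n,1 setting (one start bit, eight data bits, one stop bit).

```python
# Figures from the post.
COMPUTE_NODES = 180
SWITCHES = 2
LINE_RATE_BPS = 115200
MAX_SSH_VSP_SESSIONS = 10

# Assumption: 8,n,1 framing puts 10 bits on the wire per payload byte.
BITS_PER_BYTE_ON_WIRE = 10

# Worst case: every virtual serial port running flat out at once.
all_ports_bps = (COMPUTE_NODES + SWITCHES) * LINE_RATE_BPS

# What the documented session limit would cap the load at.
capped_bps = MAX_SSH_VSP_SESSIONS * LINE_RATE_BPS

print(f"all ports:   {all_ports_bps / 1e6:.2f} Mb/s "
      f"({all_ports_bps // BITS_PER_BYTE_ON_WIRE} payload bytes/s)")
print(f"10 sessions: {capped_bps / 1e6:.2f} Mb/s")
```

If the session cap really is related to throughput, it would hold the Chassis Manager to roughly one twentieth of the worst-case load.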

Switching OS

The 45G and 180G switches run Broadcom FASTPATH, while the 45XGc runs Comware. I have not had a good experience with the FASTPATH OS on these switches. Apparently lots of customers are running it without issue, so this may be a YMMV thing.

Power

The Moonshot chassis supports up to four power supplies. Some combinations of cartridges and switches require three power supplies for normal operation, and all four power supplies for N+1 redundancy. This is a problem because most server cabinets (and the power distribution equipment upstream of the cabinet) are set up to offer only A/B redundant feeds. However you split four supplies across two feeds, losing one feed takes out at least two supplies, so there's no way to meet the chassis 3+1 requirement without getting into hokey stuff like automatic transfer switches. I asked HP about this, and they recommended an in-cabinet UPS or two. Apparently the recommendation was not a joke.
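To make the A/B problem concrete, here is a small sketch that tries every way of splitting the supplies across two feeds and checks whether the chassis keeps enough live supplies when either feed drops. The function names are illustrative only, not anything from HP tooling.

```python
def survives_feed_loss(psus_on_a, psus_on_b, required):
    """True if at least `required` supplies stay live after losing either feed."""
    # Losing feed A leaves psus_on_b running, and vice versa, so the
    # worst case is whichever feed carries fewer supplies.
    return min(psus_on_a, psus_on_b) >= required


def workable_splits(total_psus, required):
    """Every (feed A, feed B) split that tolerates the loss of one feed."""
    return [
        (a, total_psus - a)
        for a in range(total_psus + 1)
        if survives_feed_loss(a, total_psus - a, required)
    ]


# A chassis that needs three of its four supplies: no split works.
print(workable_splits(total_psus=4, required=3))

# A lighter configuration that needs only two supplies: 2+2 is fine.
print(workable_splits(total_psus=4, required=2))
```

The first call returns an empty list, which is the whole problem: a 3+1 chassis tolerates the loss of one supply, but an A/B feed failure never takes out just one.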

Cartridge Mix/Match

Until recently, HP would only sell cartridges in bundles of 15, and only supported homogeneous cartridge configurations. Mix/match of cartridge types within a chassis was not allowed. For the workload in my environment, it'd be handy to have a small handful of Xeon-based cartridges blended in with the Atom population, and HP tell me that the restriction has been lifted! Cartridges are now available a-la-carte, and we're free to mix/match with the following constraints in mind:

- Some cartridges have four independent compute nodes onboard. These cartridges present 4 network interfaces to each switch, so they require a 180-port switch (model 180G is the only option at this time).

- Some single-node cartridges have 10Gb/s-capable NICs. These interfaces will run at 1Gb/s, so they can be used with any of the three currently available switches.

- The cartridge-facing interfaces on the 10Gb/s 45XGc switch can do 1Gb/s or 10Gb/s, so they'll support any single-node cartridge.

- HP recommend that cartridges with mechanical drives be installed toward the front of the chassis so that they'll get the coolest airflow.
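The switch constraints above boil down to a simple rule, sketched here. This reflects only my reading of the constraints listed in this post (quad-node cartridges need the 180G, single-node cartridges work with any of the three switches); it is not an HP compatibility matrix, and the function names are made up for illustration.

```python
# The three switch models shipping at the time of writing.
ALL_SWITCHES = frozenset({"45G", "180G", "45XGc"})


def compatible_switches(nodes_per_cartridge):
    """Switch models usable with one cartridge, by its node count."""
    if nodes_per_cartridge == 1:
        # Single-node cartridges, 1Gb/s or 10Gb/s-capable, work everywhere.
        return set(ALL_SWITCHES)
    if nodes_per_cartridge == 4:
        # Four interfaces per switch per cartridge need the 180-port switch.
        return {"180G"}
    raise ValueError(f"unexpected node count: {nodes_per_cartridge}")


def switches_for_chassis(cartridge_node_counts):
    """Switch models that suit every cartridge in a mixed chassis."""
    options = set(ALL_SWITCHES)
    for count in cartridge_node_counts:
        options &= compatible_switches(count)
    return options


# A mostly single-node chassis with one quad-node cartridge blended in.
print(sorted(switches_for_chassis([1, 1, 1, 4])))
```

In other words, a single quad-node cartridge in the mix pins the whole chassis to the 180G.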

Noise

This box is super noisy when the fans get excited. There's an optical sensor on the switch modules which forces the fans to full speed when the lid is removed. I found that a shiny thing set on top of the switch fools the sensor into believing the lid is in place, and the fans calm down. Probably not great for cooling, but handy if the phone rings while the lid is off of the enclosure.

Uplink Modules

Uplink ports on the 6xSFP+ uplink modules in my chassis operate at 1Gb/s or 10Gb/s, and they do not require vendor-coded transceivers. Several transceivers are officially supported by HP, the most affordable of which come from the ProCurve family.

The QSFP-based uplink modules support a cable which breaks out into four SFP+ interfaces. The SFP+ interfaces on these breakout cables do not support 1Gb/s operation.

The switch console ports integrated in the uplink module are pinned like Cisco consoles, and operate at 115200,8,n,1.

Cartridges

The m300 cartridges don't have many knobs or levers. There's no graphical console. It's serial only. The only boot-time prompts are to access the boot ROM menu on the onboard NICs, and the only options there relate to whether you'll see the "press CTRL+S" message, and for how long. That's it.

From the Chassis Manager's web interface (or via IPMI) you can power cartridges on and off, control whether they boot from local HDD or via PXE, enable/disable WoL support, and control serial console redirection. That's about the extent of what you can do cartridge configuration-wise. There's no BIOS with endless confusing options here. It's dead simple.

Update 5/15/2015

I've got an RMA m300 cartridge on my desk, and just noticed that it has a 42mm M-keyed M.2 slot. This slot isn't mentioned in any quick specs / data sheet / compatibility guide that I can find, but HP tell me that it's possible to order m300 cartridges with the following M.2 storage options:

| Part number | Option | Description |
|---|---|---|
| 765849-B21 | FIO | HP Moonshot 64G SATA VE M.2 2242 FIO Kit |
| 749892-B21 | FIO | HP Moonshot 32G SATA VE M.2 2242 FIO Kit |

Thanks for posting this. We are a small shop that likes to be on the ride edge of the technology curve and are considering moonshot, but just not sure yet whether it's the right way to go. The n+1 power is a mess. We have the typical a/b power and we've lost a circuit to maintenance before. We can't have down time.

The 1.30 revision of the chassis manager includes a new 'chassis powercap mode' which purports to address this problem. My issue with this mode (all of the modes, for that matter), is that I've yet to see a good explanation of how "capping" is applied to nodes, nor the implications of "throttling" power to the nodes during an incident.

This was really an interesting topic and I kinda agree with what you have mentioned here!

This was a lovely blog post

This is old but still interesting. I got 2 coming through my office today. I read the whole thing, pretty interesting. Mine's got some m710x cartridges. You mention yours don't have a BIOS. From the iLO management on the box you can turn each cartridge on and off, and also connect to IRC using HTML5, and there I can see the cartridge booting up just like any normal HP server. It has a BIOS in which you can do many things. No iLO configuration available though, and to access the cartridges I use the same IP as the box, just a different port.

This made it quick and easy to return all the cartridges to default, in case anyone else needs that information.